Google recently revealed an incredible breakthrough in quantum computing with its new Willow chip. What drew the most attention, though, was the suggestion from Google that the chip’s performance supports the idea that parallel universes exist.

But does the multiverse really exist? What is Google’s new Willow chip, and which parts of the breakthrough are real and which are hyperbole?

To answer that, it’s important to understand what quantum computing is, the challenges it faces, and what role the current discovery plays in the future of quantum computers (QCs).

What Are the Current Challenges of Quantum Computing?

Quantum computing has a lot of potential, but in its current state, it is unusable for most practical real-world scenarios.

Let’s start with a brief explanation of the difference between regular computers and quantum computers.

Regular computers use bits. Each bit can be in one of two states, 0 or 1, also referred to as false or true.

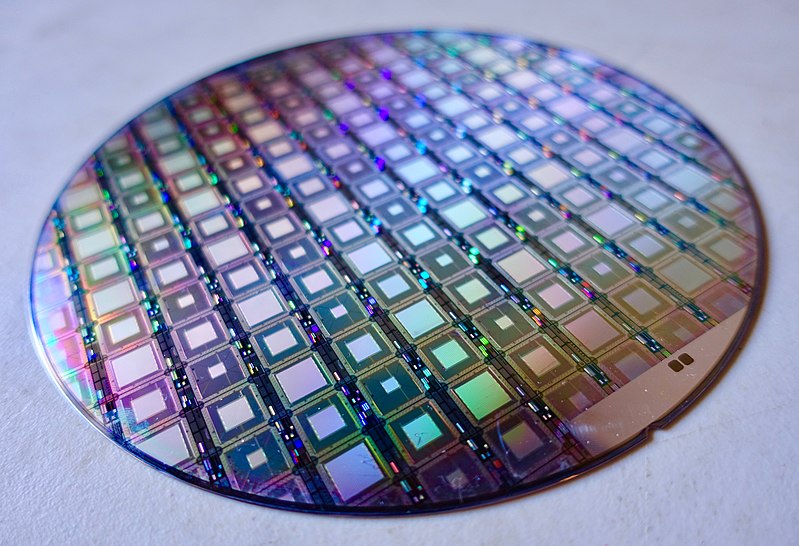

Quantum computers, on the other hand, use qubits, which can also exist in a combination (superposition) of 0 and 1, allowing certain computations to run exponentially faster.
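To make the difference concrete, here is a minimal sketch in plain Python of how a single qubit is usually described: two complex amplitudes whose squared magnitudes give the odds of reading 0 or 1. The names `measurement_probabilities`, `measure`, and `amplitudes_needed` are made up for this illustration, and the equal superposition is just an example state. It is a toy model, not how a real quantum computer is programmed.

```python
import math
import random

# A classical bit holds exactly one of two values.
bit = 1

# A single qubit is described by two complex amplitudes (alpha, beta)
# with |alpha|^2 + |beta|^2 = 1. This equal superposition is illustrative.
alpha = complex(1 / math.sqrt(2), 0)
beta = complex(1 / math.sqrt(2), 0)


def measurement_probabilities(alpha, beta):
    """Return the probabilities of reading 0 and 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha, beta, rng=random):
    """Simulate one measurement: the result is always a plain 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


def amplitudes_needed(num_qubits):
    """Count the complex amplitudes needed to describe num_qubits qubits classically."""
    return 2 ** num_qubits


p0, p1 = measurement_probabilities(alpha, beta)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print("One simulated measurement:", measure(alpha, beta))
print("Amplitudes needed for 105 qubits:", amplitudes_needed(105))
```

The last line hints at where the power comes from: describing n qubits on a regular computer takes 2^n amplitudes, so the bookkeeping doubles with every qubit added.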

The problem with quantum computing, though, is that it has a very high error rate, because qubits are extremely sensitive to “quantum noise” from their environment. As more qubits are introduced, the error rate increases as well, to the point that quantum computers are not yet usable for solving real-world problems.

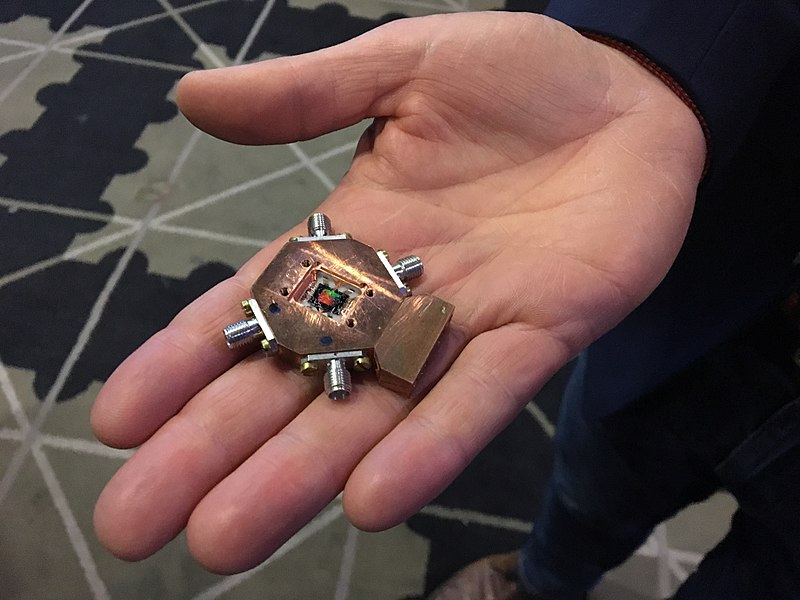
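A rough way to see why adding qubits makes things worse is to assume that every qubit has the same small, independent chance of an error. That assumption, the function name `chance_of_any_error`, and the 0.1% figure are all hypothetical and chosen only for illustration; real devices have more complicated error behavior.

```python
def chance_of_any_error(num_qubits, per_qubit_error):
    """Probability that at least one of num_qubits qubits suffers an error,
    assuming independent errors with the same per-qubit probability."""
    return 1 - (1 - per_qubit_error) ** num_qubits


# Hypothetical per-qubit error probability, chosen only for illustration.
PER_QUBIT_ERROR = 0.001

for n in (10, 100, 1_000, 10_000):
    chance = chance_of_any_error(n, PER_QUBIT_ERROR)
    print(f"{n:>6} qubits -> {chance:.1%} chance of at least one error")
```

Even with a tiny per-qubit error probability, the chance that something goes wrong somewhere climbs toward certainty as the machine grows.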

To date, only very small quantum computers, which are not powerful enough for practical computing, have kept errors at an acceptable level.

It is believed that once quantum computers do become powerful enough, they will be able to perform advanced computations that could lead to significant breakthroughs in cryptography, medical research, genetic research, and drug discovery, to name just a few fields.

What Is Google’s Breakthrough?

Google’s breakthrough is one that is 30 years in the making: a successful attempt at increasing the number of qubits, and thus the computational power of quantum computers, without increasing the error rate.

According to Google, Willow allows scientists to reduce errors exponentially as qubits are scaled up.
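“Reducing errors exponentially” means the error rate is divided by a roughly constant factor each time the error-correcting grid of qubits grows by one step. Google’s announcement describes roughly halving the error rate when going from a 3x3 to a 5x5 to a 7x7 grid. The sketch below assumes that halving continues at larger sizes; the starting error rate and the function name `logical_error_rate` are hypothetical and are not Willow’s measured figures.

```python
def logical_error_rate(distance, base_rate, suppression_factor):
    """Error rate for a grid of odd size distance x distance (distance >= 3),
    assuming each step up in size (3 -> 5 -> 7 ...) divides the error rate
    by suppression_factor."""
    steps = (distance - 3) // 2
    return base_rate / (suppression_factor ** steps)


# Hypothetical values for illustration only.
BASE_RATE = 0.003          # assumed error rate for the 3x3 grid
SUPPRESSION_FACTOR = 2.0   # "cut the error rate in half" per step

for d in (3, 5, 7, 9, 11):
    rate = logical_error_rate(d, BASE_RATE, SUPPRESSION_FACTOR)
    print(f"{d}x{d} grid ({d * d} data qubits): error rate {rate:.5f}")
```

The point of the sketch is the direction of the trend: more qubits, fewer errors, which is the opposite of what happens without error correction.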

The second breakthrough, according to Google’s blog post:

“Willow performed a standard benchmark computation in under five minutes that would take one of today’s fastest supercomputers 10 septillion years.”

Now this is where it gets interesting. According to Hartmut Neven, founder and lead of Quantum AI at Google, since this is far longer than the age of the universe, it “lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse.”
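For a sense of scale, the numbers in Google’s claim can be put side by side. The sketch below takes 10 septillion as 10^25 (short scale), uses the commonly cited estimate of about 13.8 billion years for the age of the universe, and treats “under five minutes” as exactly five minutes, so the speedup it prints is a lower bound on what is claimed.

```python
SUPERCOMPUTER_YEARS = 10 ** 25    # 10 septillion years, from Google's claim
AGE_OF_UNIVERSE_YEARS = 13.8e9    # approximate current estimate
WILLOW_MINUTES = 5                # "under five minutes", taken as an upper bound

MINUTES_PER_YEAR = 365.25 * 24 * 60

# How many times the age of the universe the classical run would take.
universe_ratio = SUPERCOMPUTER_YEARS / AGE_OF_UNIVERSE_YEARS

# Implied speedup of Willow over the supercomputer on this one benchmark.
speedup = SUPERCOMPUTER_YEARS * MINUTES_PER_YEAR / WILLOW_MINUTES

print(f"Classical runtime is about {universe_ratio:.1e} times the age of the universe")
print(f"Implied speedup on this benchmark: about {speedup:.1e}x")
```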

Regardless of whether that’s true or just hyperbole, the fact remains that this is a significant breakthrough. Although it doesn’t have many real-world applications at the moment, it appears to be real progress on a key challenge in quantum computing: reducing the error rate as the size of quantum computers is scaled up.

Can We Now Use Quantum Computers?

Unfortunately, although this is indeed a significant technological advancement, it doesn’t have much real-world application just yet. A lot more work needs to be done before computers with sufficient qubits and computing power can be developed error-free.

Google’s Willow chip has 105 qubits. Initial testing did show that increasing the number of qubits reduced errors instead of increasing them, but the chip is still too small for useful calculations.

The applications that quantum computers are hoped to be useful for require millions of qubits, not hundreds, according to Winfried Hensinger, a professor of quantum computing at the University of Sussex, as quoted by CNBC.

One of the biggest areas of computer science in which quantum computing is believed to have a dramatic impact in the future is cryptography. Current public-key cryptographic methods rely on mathematical problems, such as factoring very large numbers, that belong to a category known as NP: a proposed solution is easy to check, but no efficient way of finding one on a conventional computer is known. That asymmetry is why they are well suited to encrypting data.

Modern conventional computers can’t solve these problems easily, but quantum computers may be able to.

This isn’t yet a real problem, because QCs are too small at the moment to solve these problems in short amounts of time. However, if they become big enough, they could solve some of them quickly using quantum algorithms such as Shor’s algorithm for factoring. Strictly speaking, that would not move these problems into P, the category of problems that are “easy” for conventional computers; it would show that they are easy for quantum computers while still being believed hard for conventional ones.

That would be a significant development, because it would undermine much of the public-key cryptography we currently rely on, including the RSA algorithm, which is commonly used to encrypt data. If a QC could break such encryption easily, we would need more advanced methods to protect our sensitive data. Governments and standards bodies are already working on solutions for a post-quantum reality in which current cryptographic methods would be obsolete.
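To see why fast factoring matters, consider a toy version of RSA. The public key contains a number n that is the product of two secret primes; anyone who can factor n can rebuild the private key. The sketch below uses tiny textbook primes and plain trial division on a regular computer as a stand-in for the factoring step. A quantum computer would use Shor’s algorithm instead, which is not implemented here, and the helper name `trial_division_factor` is made up for this example. Real RSA keys use primes hundreds of digits long, for which trial division is hopeless.

```python
def trial_division_factor(n):
    """Find two factors of n by brute force (classical, far too slow for real keys)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")


# Key owner: pick two secret primes and derive the key pair (toy sizes).
p, q = 61, 53
n = p * q                           # public modulus
e = 17                              # public exponent
d = pow(e, -1, (p - 1) * (q - 1))   # private exponent (requires Python 3.8+)

# Sender: encrypt a message using only the public key (n, e).
message = 65
ciphertext = pow(message, e, n)

# Attacker: knows only n, e, and the ciphertext, but can factor n.
fp, fq = trial_division_factor(n)
recovered_d = pow(e, -1, (fp - 1) * (fq - 1))
recovered_message = pow(ciphertext, recovered_d, n)

print("Factors found:", fp, fq)
print("Recovered message:", recovered_message)
```

Once the two primes are known, recovering the private exponent takes a single line, which is why the whole scheme depends on factoring staying hard.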

However, according to Google Quantum AI director and COO Charina Chou, as quoted in The Verge, we are still at least 10 years away from that. A QC would need at least four million qubits to crack RSA, and the 105 qubits of the Willow chip are nowhere near enough.

Furthermore, according to Sabine Hossenfelder, a German physicist, Google hyped up its claims a little too much. The computation used to contrast a conventional computer with the Willow chip is known to be incredibly hard and slow on a conventional computer. It was likely chosen deliberately, which allowed Google to exaggerate how much faster the Willow chip is than a conventional computer in its claim of a 10-septillion-year difference.

In fact, Google performed the same kind of calculation five years ago on a chip with roughly 50 qubits.

Another factor is the significant cooling power required for the Willow chip, which would also be a constraint in developing larger computers.

Final Thoughts

In conclusion, this is indeed a remarkable breakthrough. It paves the way for scaling up quantum computers while reducing errors rather than multiplying them, which would allow quantum computers to reach their full potential.

At the same time, let’s not put the cart before the horse. We are still years away from demonstrating that a quantum computer with millions of qubits, which is what will be needed to solve the big problems QCs are hoped to solve, can be operated reliably.